When adding more variables to a model, you need to think about the cause-and-effect assumptions that implicitly go with them, and you should also look at how their addition changes the estimated coefficients of other variables. Do the residual statistics and plots indicate that the model's assumptions are OK? If they aren't, then you shouldn't be obsessing over small improvements in R-squared anyway. It also helps to translate R-squared into error terms. For example, if the model's R-squared is 90%, the variance of its errors is 90% less than the variance of the dependent variable, and the standard deviation of its errors is about 68% less than the standard deviation of the dependent variable (because √0.10 ≈ 0.32).
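The arithmetic behind these percentages can be sketched in a few lines of Python. This is a minimal sketch that assumes the simple in-sample relationship "error variance / variance of y = 1 - R²" and ignores degrees-of-freedom corrections. The function names `error_sd_fraction` and `relative_sd_reduction` are hypothetical helpers, not part of any library.

```python
import math


def error_sd_fraction(r2):
    """Ratio of error SD to the SD of y, assuming error variance / var(y) = 1 - R^2."""
    if not 0.0 <= r2 <= 1.0:
        raise ValueError("r2 must lie in [0, 1] for this in-sample identity")
    return math.sqrt(1.0 - r2)


def relative_sd_reduction(r2_old, r2_new):
    """Relative drop in error SD when R^2 rises from r2_old to r2_new."""
    return 1.0 - error_sd_fraction(r2_new) / error_sd_fraction(r2_old)


r2 = 0.90
print(f"error variance is {r2:.0%} less than var(y)")                 # 90%
print(f"error SD is {1 - error_sd_fraction(r2):.0%} less than SD(y)")  # 68%
print(f"R^2 75% -> 80%: error SD falls by {relative_sd_reduction(0.75, 0.80):.1%}")
```

Because the standard deviation is a square root of the variance, large gains in R-squared near the top of the scale translate into noticeably smaller relative gains in error SD.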

How To Interpret R-squared and Goodness-of-Fit in Regression Analysis

Choosing which variables to include may involve thorough exploratory data analysis or techniques like stepwise regression or regularization. In general, if you are doing predictive modeling and want a concrete sense of how wrong your predictions are in absolute terms, R² is not a useful metric. Metrics like MAE (mean absolute error) or RMSE (root mean squared error) do a better job of conveying the magnitude of the errors your model makes, in the units of the dependent variable. This is useful in absolute terms, but also when comparing models, where you might want to know concretely by how much the precision of their predictions differs.
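To see why R² says nothing about error size in absolute terms, here is a minimal pure-Python sketch on hypothetical data. Multiplying both the observations and the predictions by 10 leaves R² unchanged, while MAE and RMSE grow tenfold. The helper names `mae`, `rmse` and `r_squared` are illustrative, not a specific library's API.

```python
import math


def mae(y_true, y_pred):
    # Mean absolute error: average error size, in the units of y
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    # Root mean squared error: like MAE, but penalizes large errors more
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    # 1 - SS_res / SS_tot, with SS_tot measured around the mean of y_true
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical observations and predictions
y = [3.0, 5.0, 7.0, 9.0, 11.0]
p = [3.5, 4.5, 7.5, 8.5, 11.5]
# Same data on a scale ten times larger
y10 = [10 * v for v in y]
p10 = [10 * v for v in p]

print(r_squared(y, p), mae(y, p), rmse(y, p))          # 0.96875 0.5 0.5
print(r_squared(y10, p10), mae(y10, p10), rmse(y10, p10))  # 0.96875 5.0 5.0
```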


The residual sum of squares is simply the sum of squared errors of the model, that is, the sum of squared differences between the true values y and the corresponding model predictions ŷ. R-squared cannot determine whether the coefficient estimates and predictions are biased, which is why you must assess the residual plots. All else being equal, a higher R-squared means the model fits the sample data more closely, but a higher value alone does not make a model better.
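For reference, the usual definitions are given below, with n the number of observations and ȳ the mean of the observed values. R² compares the model's residual sum of squares with the total sum of squares around the mean.

```latex
SS_{\text{res}} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2, \qquad
SS_{\text{tot}} = \sum_{i=1}^{n} (y_i - \bar{y})^2, \qquad
R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}}
```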

Combining R-squared with Other Metrics

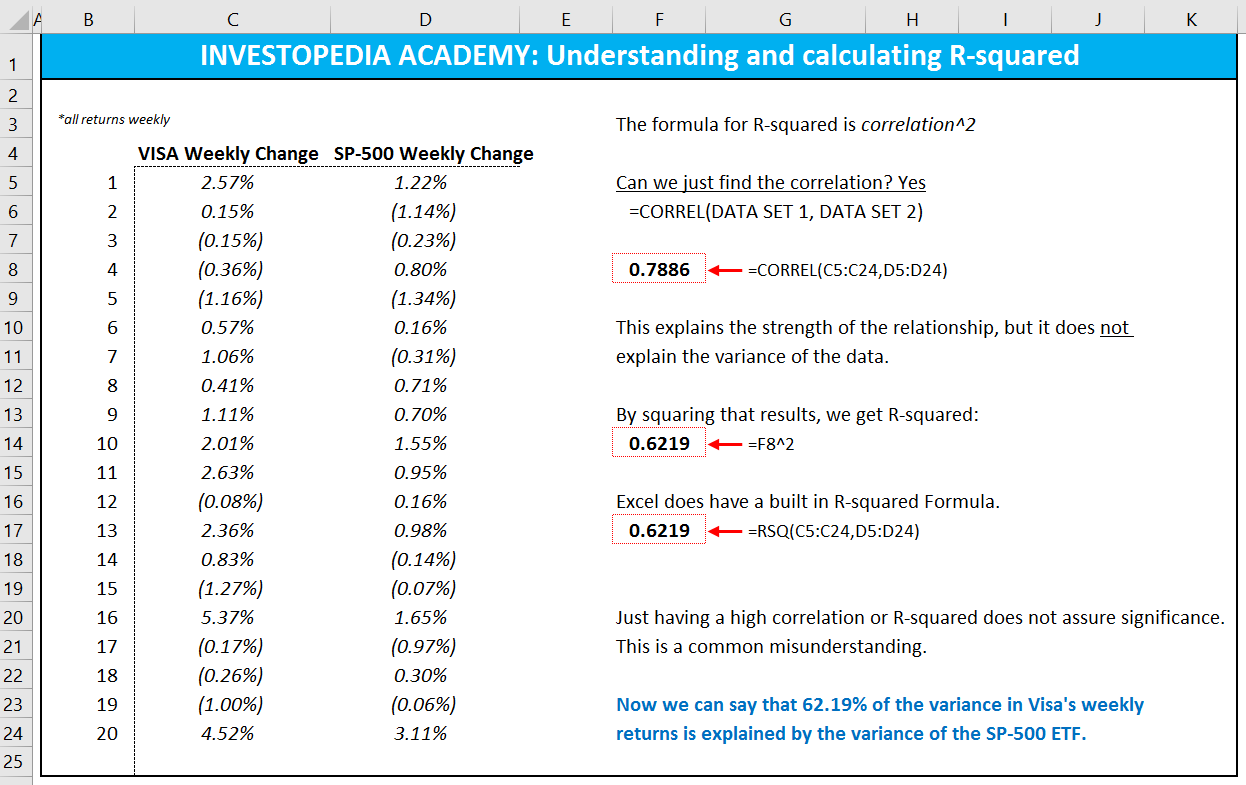

Even a model with a low R-squared can be valuable when it detects a weak but real signal in noisy data. In a clinical trial, for instance, a small but reliable treatment effect could save many lives over the long run and be worth millions of dollars in profits if it leads to the drug's approval for widespread use. R-squared is a statistical measure in regression analysis that indicates the proportion of the variance in the dependent variable that is predictable from the independent variables. Essentially, it's a score that reflects how well the data fit the regression model: a value of 1 indicates a perfect fit, and 0 indicates that the model predicts no better than the mean of the dependent variable.

For example, in finance, if a stock or fund has an R-squared close to 100% against its benchmark but a beta below 1, it is most likely offering higher risk-adjusted returns.

Anecdotally, defining R² as the proportion of variance explained is also what the vast majority of students trained in using statistics for inferential purposes would probably say if you asked them. But, as we will see in a moment, this common way of defining R² is the source of many of the misconceptions and confusions related to R².

- (The latter issue is not the bottom line, but addressing it is a step toward fixing the model assumptions.) Most interestingly, the deflated income data shows some fine detail that matches up with similar patterns in the sales data.

- To gain a better understanding of adjusted R-squared, check out the following example.

- In some fields, it is entirely expected that your R-squared values will be low.


For example, if the observed and predicted values do not appear as a cloud formed around a straight line, then the R-squared, and the model itself, will be misleading. Similarly, outliers can make the R-squared statistic exaggerated or much smaller than is appropriate to describe the overall pattern in the data. The problem with questions like these is that it is a bit silly to judge whether a model is good based on the value of the R-squared statistic alone. Sure, it would be great if you could check a model by looking at its R-squared, but it makes no sense to do so.
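The outlier effect is easy to reproduce. The minimal sketch below fits a straight line by ordinary least squares to eight hypothetical points that have no linear trend, which gives an R² of essentially 0. It then adds a single extreme point, and R² jumps to about 0.92 even though the other eight points are unchanged. The data and the helper name `simple_ols_r2` are illustrative assumptions.

```python
def simple_ols_r2(x, y):
    """Fit y = a + b*x by ordinary least squares; return (a, b, R^2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx          # slope
    a = my - b * mx        # intercept
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return a, b, 1.0 - ss_res / ss_tot


# Hypothetical data with no linear relationship between x and y
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [5, 3, 6, 4, 5, 3, 6, 4]
_, _, r2_clean = simple_ols_r2(x, y)

# The same data plus one extreme observation at (30, 30)
_, _, r2_outlier = simple_ols_r2(x + [30], y + [30])

print(f"without outlier: R^2 = {r2_clean:.2f}")
print(f"with outlier:    R^2 = {r2_outlier:.2f}")
```

A single point far from the rest dominates both sums of squares, so the fitted line mostly describes that point rather than the overall pattern.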

Adjusted R-squared is a less biased estimate of the fraction of variance explained, taking into account the sample size and number of variables. Usually adjusted R-squared is only slightly smaller than R-squared, but it is possible for adjusted R-squared to be zero or negative if a model with insufficiently informative variables is fitted to too small a sample of data. An increase in R-squared from 75% to 80% would reduce the error standard deviation by about 10% in relative terms. That begins to rise to the level of a perceptible reduction in the widths of confidence intervals. But don't forget: confidence intervals are realistic guides to the accuracy of predictions only if the model's assumptions are correct.
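A minimal sketch of the standard adjustment, 1 - (1 - R²)(n - 1)/(n - p - 1), where n is the number of observations and p is the number of predictors excluding the intercept. The two calls use hypothetical values. They show the "only slightly smaller" case for a large sample and a negative value for a small sample with many weak predictors.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 for p predictors (plus an intercept) fitted to n observations."""
    if n - p - 1 <= 0:
        raise ValueError("need n > p + 1 observations")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


# Large sample, few predictors: barely below the raw R^2 of 0.80
print(round(adjusted_r2(0.80, n=200, p=3), 4))  # about 0.797
# Small sample, many weak predictors: the adjustment goes negative
print(round(adjusted_r2(0.30, n=10, p=5), 4))   # about -0.575
```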

Furthermore, as we have observed, good or bad R² values can be driven by many factors, from overfitting to the amount of noise in your data. Interpreting R² as the proportion of variance explained is misleading, and it conflicts with basic facts about the behavior of this metric. If R² is not a proportion, and its interpretation as variance explained clashes with some basic facts about its behavior, do we have to conclude that our initial definition is wrong? Are Wikipedia and all those textbooks presenting a similar definition wrong? It depends heavily on the context in which R² is presented, and on the modeling tradition we are embracing. If we simply analyze the definition of R² and try to describe its general behavior, regardless of which type of model we are using to make predictions, and assuming we will want to compute this metric out-of-sample, then yes, they are all wrong.
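One basic fact that breaks the "proportion" reading is that out-of-sample R² can be negative. The minimal sketch below computes R² on a hypothetical held-out set, with the sum of squares measured around the test-set mean. Predictions that are worse than simply predicting that mean give a value below 0, and no proportion of variance can be negative. The data and the helper name are illustrative.

```python
def r_squared(y_true, y_pred):
    # 1 - SS_res / SS_tot, with SS_tot measured around the mean of y_true
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y_test = [1.0, 2.0, 3.0, 4.0, 5.0]   # hypothetical held-out observations
bad_preds = [5.0, 4.0, 3.0, 2.0, 1.0]  # a model that gets the trend backwards
mean_preds = [3.0] * 5               # always predicting the test-set mean

print(r_squared(y_test, mean_preds))  # 0.0
print(r_squared(y_test, bad_preds))   # -3.0
```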